Text analysis

Because our dataset contains a large number of review texts, we applied several NLP methods to them.

Review Keyword

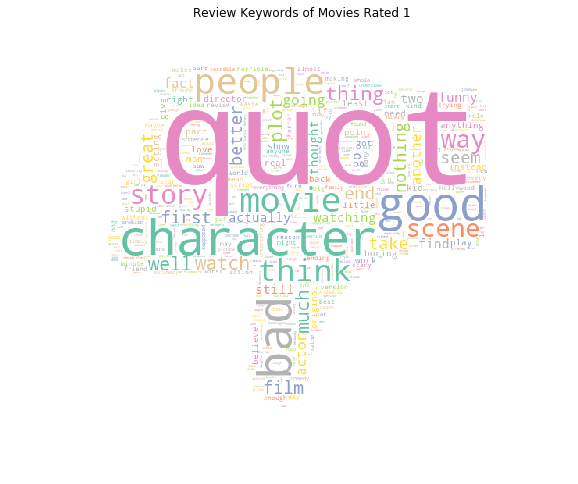

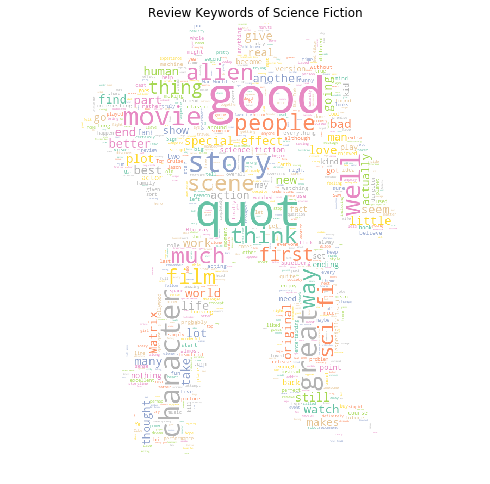

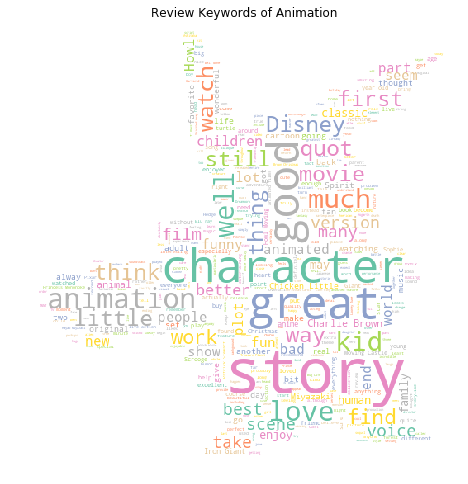

First, let’s take a look at the keywords in the review texts. The keywords of reviews for movies rated 1 and 5 are visualized as word clouds with thumbs-up and thumbs-down masks. We also visualized the keywords of science fiction and animation movies, using masks of Transformers and Pikachu.

The word clouds show that some words are specific to a genre (for example, ‘alien’ in science fiction and ‘disney’ in animation). Overall, however, it’s hard to tell the reviews apart: most of them contain similar words, and we cannot judge a review from a simple word cloud. So in the following sections we turn to a more detailed text processing tool, nltk, and look more closely at the patterns in the review texts.
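A word cloud is, at its core, a picture of word frequencies within a group of reviews. The following is a minimal sketch of that counting step, not the code used for the figures above. The stop-word list, the `keyword_counts` helper and the toy reviews are all hypothetical and only serve as an illustration.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny stop-word list; a real run would use a fuller one.
STOP_WORDS = {"the", "a", "an", "and", "of", "is", "it", "this", "to", "in", "was", "i"}

def keyword_counts(texts, top_n=5):
    """Return the top_n most frequent non-stop-word tokens across texts."""
    counts = Counter()
    for text in texts:
        # Lowercase the text and keep runs of letters (and apostrophes) as tokens.
        tokens = re.findall(r"[a-z']+", text.lower())
        counts.update(t for t in tokens if t not in STOP_WORDS)
    return counts.most_common(top_n)

# Toy reviews, made up for illustration and grouped by genre.
reviews = {
    "science fiction": ["The alien ship was amazing", "An alien invasion story"],
    "animation": ["A classic Disney movie", "Disney animation is charming"],
}

top_keywords = {genre: keyword_counts(texts, top_n=3) for genre, texts in reviews.items()}
print(top_keywords)
```

Counts like these are what a word cloud renderer scales its word sizes by. Comparing the top keywords of two groups side by side also makes it easier to see how much of the vocabulary is shared.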

Sentiment Analysis

We ran sentiment analysis on each review text and obtained its positive, negative, neutral and compound scores. We then averaged each score within every rating group (from 1 to 5) to see how the sentiment of reviews differs across ratings.

As we can see, as the rating goes up, the average positive sentiment score increases and the average negative sentiment score decreases. Overall, the compound sentiment score increases as well.

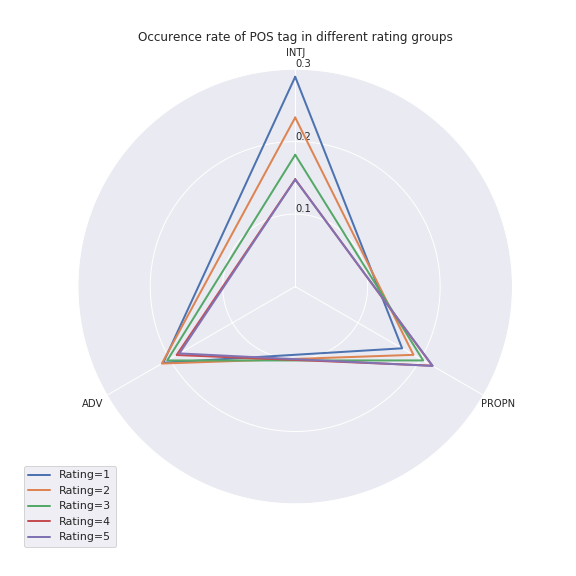
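The per-rating averaging described above can be sketched as follows. This is an illustration under assumptions, not the original code: the key names `neg`, `neu`, `pos` and `compound` follow the output of NLTK's VADER analyzer (`SentimentIntensityAnalyzer.polarity_scores`), which is one scorer that produces these four values, but the text does not say which scorer was used. The `average_scores_by_rating` helper and the sample scores are hypothetical.

```python
from collections import defaultdict

# Assumed score names (they match VADER's output keys).
SCORE_KEYS = ("neg", "neu", "pos", "compound")

def average_scores_by_rating(scored_reviews):
    """scored_reviews: iterable of (rating, scores_dict) -> {rating: {key: mean}}."""
    sums = defaultdict(lambda: dict.fromkeys(SCORE_KEYS, 0.0))
    counts = defaultdict(int)
    for rating, scores in scored_reviews:
        counts[rating] += 1
        for key in SCORE_KEYS:
            sums[rating][key] += scores[key]
    # Divide each accumulated sum by the number of reviews in that rating group.
    return {
        rating: {key: sums[rating][key] / counts[rating] for key in SCORE_KEYS}
        for rating in sorted(counts)
    }

# Made-up scores for illustration only; these are not results from the dataset.
scored = [
    (1, {"neg": 0.4, "neu": 0.5, "pos": 0.1, "compound": -0.6}),
    (1, {"neg": 0.2, "neu": 0.7, "pos": 0.1, "compound": -0.2}),
    (5, {"neg": 0.0, "neu": 0.5, "pos": 0.5, "compound": 0.8}),
]
averages = average_scores_by_rating(scored)
print(averages)
```

Keeping the scoring step separate from the aggregation means the same averaging works with any scorer that returns these four keys.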

Part of Speech Tagging

We’ve tagged every word in the review texts with its part of speech (POS). We would like to know whether every POS tag occurs at the same rate in each rating group. We normalized the occurrence of a POS tag in a rating group by the total count of words in that group, and then calculated how each POS tag is distributed across the five groups. If a POS tag were uniformly distributed, its percentage in each group would be about 20%. We made a boxplot of the occurrence rate for each POS tag and the results are as follows:

Some POS tags show a high variance. We chose the three POS tags with the highest variance, INTJ (interjection), PROPN (proper noun) and ADV (adverb), to see how their occurrence differs across rating groups.

The distributions for rating=4 and rating=5 nearly overlap with each other. Apart from this, as the rating goes down, the occurrence of INTJ and ADV increases, and the occurrence of PROPN decreases. One possible explanation is that reviewers who give a high rating tend to talk about the movies, directors and actors (all of which are proper nouns), while reviewers who give a low rating tend to use interjections and adverbs to express their negative feelings. The difference in INTJ is the clearest: there are more interjections in the negative reviews.
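The normalization and variance ranking used in this section can be sketched as below. It is one reading of the description above rather than the original code: each tag count is divided by the total word count of its rating group, and the resulting rates are rescaled so that a tag's shares across groups sum to 1 (a uniform tag would then sit at 20% in each of five groups). The function names and the toy tagged reviews are hypothetical.

```python
from collections import Counter
from statistics import pvariance

def pos_share_by_rating(tagged_reviews):
    """tagged_reviews: iterable of (rating, [pos_tag, ...]).

    Returns {tag: {rating: share}}, where each tag's shares sum to 1.
    """
    tag_counts = {}  # rating -> Counter of POS tags in that rating group
    for rating, tags in tagged_reviews:
        tag_counts.setdefault(rating, Counter()).update(tags)

    ratings = sorted(tag_counts)
    all_tags = set().union(*tag_counts.values())
    shares = {}
    for tag in all_tags:
        # Occurrence rate: tag count divided by the total words in the rating group.
        rates = {r: tag_counts[r][tag] / sum(tag_counts[r].values()) for r in ratings}
        total = sum(rates.values())
        # Rescale so the rates across rating groups sum to 1.
        shares[tag] = {r: rates[r] / total for r in ratings}
    return shares

def highest_variance_tags(shares, k=3):
    """Return the k tags whose shares vary most across rating groups."""
    return sorted(shares, key=lambda t: pvariance(list(shares[t].values())), reverse=True)[:k]

# Toy input, made up for illustration: two rating groups instead of five.
tagged = [
    (1, ["INTJ", "ADV", "NOUN", "NOUN"]),
    (5, ["PROPN", "PROPN", "NOUN", "NOUN"]),
]
shares = pos_share_by_rating(tagged)
print(highest_variance_tags(shares, k=3))
```

In the toy input, NOUN makes up half of the words in both groups, so its share is uniform and its variance is zero, while the other three tags occur in only one group and rank highest.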